Our SaaS Journey

- Assaf Sauer

- Mar 12, 2025

- 4 min read

There’s no guide for building a SaaS or running it effectively.

Most companies, when designing a SaaS solution, default to managed services on the assumption that—despite higher costs—they simplify everything.

Today, I want to prove to you that running SaaS on a single declarative Kubernetes cluster is a genuine game-changer.

It’s not just about cutting costs, avoiding vendor lock-in, or staying cloud-agnostic: it’s about centralizing everything (security, ops, automation and more) in a fully declarative setup. That means complete control, swift rollbacks and the freedom to continuously improve your system as it evolves.

Our SaaS Journey with Stacktic

When we first completed Stacktic using local Docker environments, we could finally design our SaaS and move our platform to Kubernetes, which is exactly what it was built for. It was an exciting moment: for the very first time, we put our platform to the test in a real production environment, just as our customers will.

Let’s start with the end. This is what our full-stack SaaS looks like (for security reasons, some components were removed):

Here are the main challenges we needed to overcome:

Lots of different environments

Beyond dev, staging, and production environments, we also have dedicated environments for customer PoCs, each with specific versions.

Integrating AI agents

We require flexible infrastructure that can adapt quickly. Right now, we’re using OpenAI’s Agent and also testing Llama, though we mainly rely on OpenAI due to resource constraints in developing our own large language model.

Database modification in prod

In Stacktic, we often modify production database tables when rolling out new app versions. This is a huge risk for our production SaaS.

Strict app security (kube-bench guidelines and industry best practices)

Extremely strict security. We must follow best practices without slowing down development or blocking capabilities.

The obvious

Alerts, metrics, logs and monitoring must be done efficiently to keep track of everything from key components like Keycloak and cert-manager to security logs.

Additional hidden challenges we discovered later

Automated data migrations. We frequently need to move data from one environment to another.

Rapid technology changes. The stack evolves quickly, so we need the ability to add or roll back services (messaging, databases, etc.) without disruption.

Local development environments. Setting up local dev using IDE/Docker grew complex over time—maintenance of microservices locally became a headache.

How we tackled these challenges

Lots of different environments

Drag-and-drop environments. You can duplicate environments in a monorepo within the same stack or create dedicated stacks for different needs.
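As a rough illustration of what duplicating an environment inside one repository can amount to, the sketch below clones an existing environment definition and overrides only what differs. The `Environment` fields, the names and the versions are all hypothetical; this is not Stacktic's actual model.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Environment:
    """Hypothetical description of one environment in the monorepo."""
    name: str
    namespace: str
    app_version: str
    replicas: int = 1
    labels: dict = field(default_factory=dict)


def duplicate(source: Environment, name: str, **overrides) -> Environment:
    """Copy an environment, changing only the name, namespace and overrides."""
    labels = {**source.labels, "env": name}
    return replace(source, name=name, namespace=name, labels=labels, **overrides)


staging = Environment("staging", "staging", app_version="1.4.0", replicas=2,
                      labels={"env": "staging", "team": "platform"})

# A customer PoC pinned to a specific version, cloned from staging.
poc = duplicate(staging, "poc-acme", app_version="1.3.2", replicas=1)
print(poc)
```

The source environment is never mutated, so a PoC can drift from staging without affecting it.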

Integrating AI agents

We use the OpenAI SDK for Python to handle calls to the OpenAI API. We secured it with connection management to other components (like the bucket store where relevant data is kept).
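For readers who have not used the SDK, a minimal sketch of such a call might look like the following. The model name, the prompt wording, and the idea of passing context already fetched from a bucket are assumptions made for illustration; the post does not describe the actual integration.

```python
import os


def make_client():
    """Create an OpenAI client; the API key is read from the environment."""
    from openai import OpenAI  # requires `pip install openai`
    return OpenAI(api_key=os.environ["OPENAI_API_KEY"])


def ask_agent(client, question: str, context: str, model: str = "gpt-4o-mini") -> str:
    """Send one question plus retrieved context and return the text reply.

    `context` stands in for data already fetched from the bucket store;
    the model name is an assumption, not the one the author uses.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "Answer using only the provided context."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
    )
    return response.choices[0].message.content
```

A call would then be `ask_agent(make_client(), "Which services changed?", context=doc_text)`. Passing the client in as an argument keeps credentials in one place and makes the function easy to exercise with a stub.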

Database modification in prod

Our solution is relatively simple: we do weighted traffic splitting (like a canary release). We split traffic between the current production containers and the containers whose databases have been updated. Then we remove traffic from the old production containers, apply our database modifications, and swap them back in.
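The weighting itself can be pictured with a small simulation. This sketch only models how request weights move from the old containers to the updated ones; in a cluster the weights would be set in whichever ingress or mesh is in use, which the post does not name. The 25% step size is an assumption.

```python
import random


def shift_plan(step: int = 25):
    """Return (old_weight, new_weight) pairs moving traffic from old to new."""
    if not 0 < step <= 100:
        raise ValueError("step must be between 1 and 100")
    new_weights = list(range(0, 100, step)) + [100]
    return [(100 - new, new) for new in new_weights]


def pick_backend(old_weight: int, new_weight: int, rng: random.Random) -> str:
    """Choose a backend for one request according to the current weights."""
    return rng.choices(["old", "new"], weights=[old_weight, new_weight])[0]


rng = random.Random(0)  # seeded so repeated runs give the same sample
for old, new in shift_plan(step=25):
    sample = [pick_backend(old, new, rng) for _ in range(1000)]
    print(f"old={old:3d}% new={new:3d}% -> {sample.count('new')} of 1000 requests hit new")
```

Once the old side reaches a weight of zero it receives no requests, which is the window in which its database can be modified before it is swapped back in.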

Strict app security (kube-bench guidelines and industry best practices)

Stacktic automates security policies. We simply labeled certain policies as “production” and enforced them there, so they don’t become a bottleneck during development. Additional security measures are also in place, though I won’t detail them all here for security reasons.
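The idea of labeling policies per environment can be sketched in a few lines. The policy names and the `enforced_in` field below are hypothetical and are not Stacktic's real policy set; they only show how a label decides where a rule applies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical security policy with the environments it is enforced in."""
    name: str
    enforced_in: frozenset


POLICIES = [
    Policy("deny-privileged-containers", frozenset({"dev", "staging", "production"})),
    Policy("require-read-only-root-fs", frozenset({"production"})),
    Policy("restrict-egress-traffic", frozenset({"production"})),
]


def policies_for(env: str) -> list:
    """Return the names of the policies enforced in the given environment."""
    return [p.name for p in POLICIES if env in p.enforced_in]


print("dev:", policies_for("dev"))
print("production:", policies_for("production"))
```

Developers only meet the baseline rule in dev, while production picks up the full set.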

The obvious

Alerts, metrics, logs, and versioning. Observability has been straightforward so far: we use Prometheus and Grafana dashboards, fully automated. Soon, we’ll add more advanced telemetry. For log aggregation, we use Loki integrated with Grafana. This setup gives us centralized security logs and basic alerts and dashboards for essential components.

Automated data migrations

Once we realized how often we needed to replicate databases across dev, stage, and production, we created automated Kubernetes Jobs that replicate and migrate data between these environments.
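To make the shape of such a Job concrete, here is a sketch that builds a `batch/v1` Job manifest for one migration. The image name, the environment variable names and the retry limit are hypothetical; the post does not show the real Jobs.

```python
import json


def migration_job(source_env: str, target_env: str, image: str) -> dict:
    """Build a Kubernetes Job manifest that copies data between environments."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": f"migrate-{source_env}-to-{target_env}",
            "namespace": target_env,
        },
        "spec": {
            "backoffLimit": 2,  # retry a failed pod at most twice
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "migrate",
                        "image": image,  # hypothetical migration image
                        "env": [
                            {"name": "SOURCE_ENV", "value": source_env},
                            {"name": "TARGET_ENV", "value": target_env},
                        ],
                    }],
                },
            },
        },
    }


job = migration_job("production", "staging", "registry.example.com/db-migrate:1.0")
print(json.dumps(job, indent=2))
```

Because kubectl accepts JSON manifests as well as YAML, the printed output could be piped into `kubectl apply -f -`.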

Rapid technology changes

We thrive on the ability to experiment—adding new services or rolling back quickly. Often we just duplicate a stack and spin it up on another cluster for a “playground.” Everything is managed in one monolithic Git repository, which keeps things simpler than it sounds.

Local development environments

Eventually, we realized we should work directly with containerized apps rather than complex local Docker setups. Our favorite combo is Tilt with Argo CD image updates. Essentially, these tools sync your IDE with the containerized app in real time. I’m still puzzled why people stick to fully local Docker environments, given all the complexity and time they cost.

Final thoughts

I’m not sure we followed every best practice or conformed perfectly to every recognized standard. But we ended up with a SaaS that’s flexible, built for change, and fully automated, covering migrations, environments, security, versioning and more. Best of all, we can deploy it all with a single command (or a GitOps toggle button):

kubectl apply -k k8s/deploy/over/dev

...and it works anywhere, on any cloud or on-prem.

So, let’s explore what would have happened if we had chosen to build Stacktic entirely on managed services. It would look something like this:

Combining Kubernetes with external managed services is the perfect recipe for undermining simplicity and duplicating efforts.

You end up fragmenting automation, security, and more—losing the benefits of a single, centralized declarative solution.

Duplicate automation

You end up mixing GitOps and CI with IaC tools like CodeDeploy or Terraform, your own declarative approach, and more, leading to repetitive or conflicting processes.

Fragmented security

Tools like OPA and RBAC may already be set up within Kubernetes, but then you add GuardDuty, IAM, etc., creating multiple overlapping security layers that don’t integrate well at all.

Scattered logging

If you already use Loki and then add CloudWatch, you’re splitting logs across different platforms, complicating everything from observability to queries and operations.

And that’s before even considering the costs, operational overhead, and the constraints that limit flexibility and adoption of new technologies.

As for auto scaling and CI—let’s save that for the next blog post.

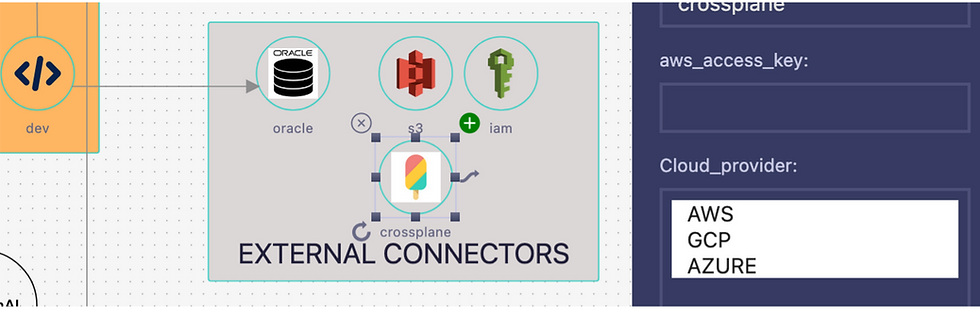

If you’re determined to use managed services or external Kubernetes components, why not centralize them through Stacktic so everything stays managed in one place—and chaos is avoided altogether?

Let’s keep it for the next blogs :-)
